Data-intensive Computing

Data-Intensive Computing Research and EducationProject partially funded by National Science Foundation Grant NSF-DUE-CCLI-0920335: PI B. Ramamurthy, $249K.

An Excerpt from the funded grant (2009-2014)

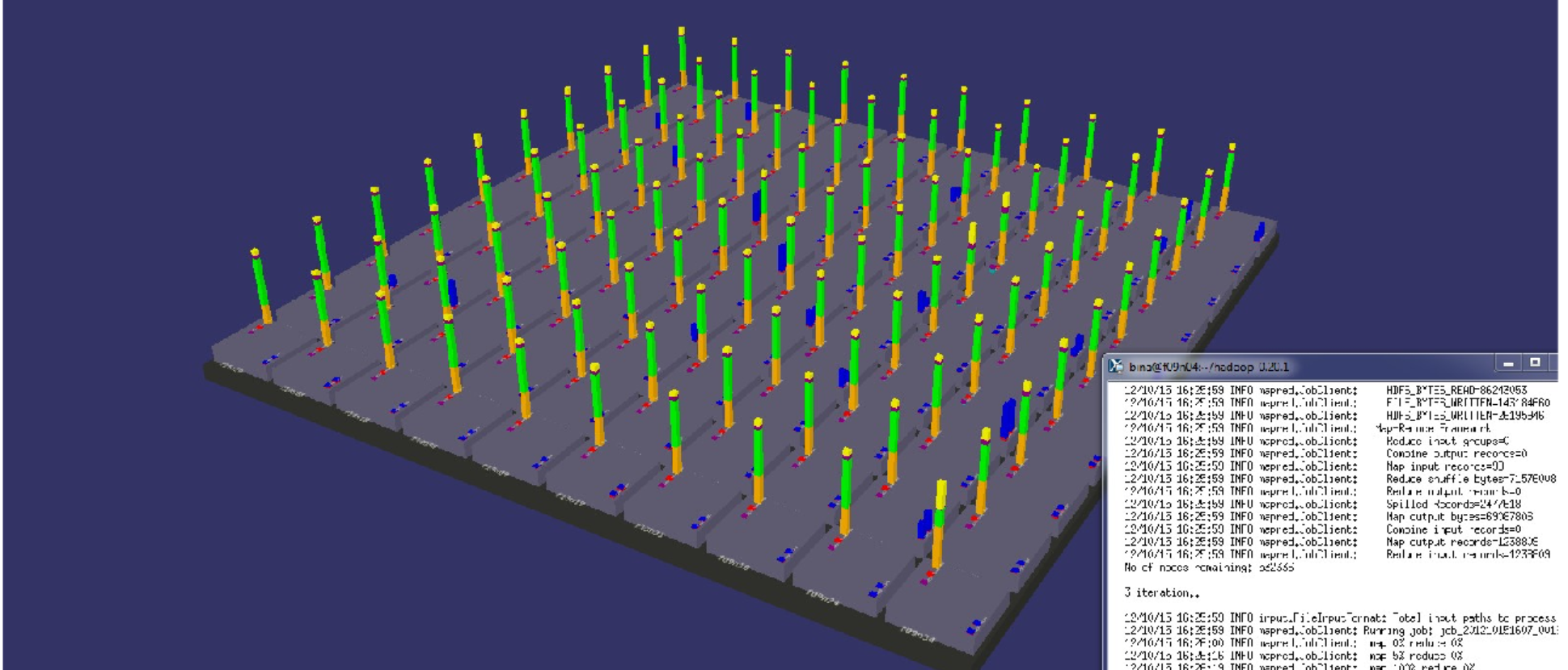

Data-intensive computing has been receiving much attention as a collective

solution to address the data deluge that has been brought

about by tremendous advances in distributed systems and

Internet-based computing. An innovative programming models

such as MapReduce and a peta-scale distributed file system to

support it have revolutionized and fundamentally changed

approaches to large scale data storage and processing.

These data-intensive computing

approaches are expected to have profound impact on any

application domain that deals with large scale data, from

healthcare delivery to military intelligence. A new forum

called big-data computing group

has been formed by a consortium of industrial stakeholders and

agencies including NSF and CRA (Computing Research Associates)

to promote wider dissemination of the big-data solutions and

transform mainstream applications. Given the omnipresent

nature of large scale data and the tremendous impact they have

on a wide variety of application domains, it is imperative to

ready our workforce to face the challenges in this area. This project aims to improve

the big-data preparedness of diverse STEM

audience by defining a comprehensive framework for education

and research in data-intensive computing.

A new certificate program in data-intensive computing

has been

approved by SUNY and is offered by the University. The details are in the catalog page here.