CSE4/587 Spring 2019

Data-intensive Computing = Data Science + Big Data Infrastructure

| Date | Topic | Chapter | Classnotes | To do: |

|---|---|---|---|---|

| 1/28 | Course goals and plans | Course description | ||

| 2/5 | Data-intensive computing | What is DI? | DI | Read Ch.1 of DS book |

| Datascience Roadmap | What is DS? | DS1 | AWS, Jupyter, R-Studio | |

| 2/7 | Exploratory Data Analysis (EDA) | R Intro | EDA | Lab 1 |

| 2/12 | Data cleaning: An important first step | STModels, CSAlgs | Cleaning | Data |

| 2/14 | Demo on models | STModels, CSAlgs | Model fitting and Eval | Demo |

| 2/19 | Discussion on models | LM, KMeans and Knn | Model fitting and Eval | Demo |

| 2/21 | Review of data models | LM, KMeans and Knn | Review | Read Ch.1-3 |

| 2/21 | Data Infrastructures | Hadoop | Hadoop Basics | MR References |

| 2/28 | Big Data Algorithms | MapReduce | Mapreduce Basics | MR References |

| 3/5 | Hands-on MR | MapReduce | Mapreduce code and execution | MR-Hadoop documents |

| 3/7 | MR Code | MapReduce | Mapreduce algorithm and code: Ch 2 | MR-Hadoop documents |

| 3/13 | Midterm Review | Lab 2 Discussion | Exam 1 Review | Lab 2 |

| 3/26 | Mid-term Exam | 3.30-4.50PM | Knox 20 | Review |

| 3/28 | Co-occurrence | Ch.3 LD | Word Co-occurrence Analysis | Lab2 Discussion |

| 4/4 | Graph analysis | Ch.5 LD | Graph and network Analysis | MR.Graph |

| 4/11 | Spark | Notes | Data Analytics with Spark | Apache Spark |

| 4/23 | Spark Demo | Spark Installation | Data Analytics with Spark | Demos |

| 4/25 | Bayes | Bayesian Analysis | Bayesian Analysis | Exam topic |

| 4/29 | Logistic Regression | Notes | Logit Analysis | Exam topic |

| 5/2 | Final Review | Notes | Review | Exam topics |

| 5/7 | Evaluation of classifictaion metrics | Notes | Evaluate Classifiers | Exam topic |

| 5/16 | Final Exam | 3.30-6.30PM | Konx 20 | Review |

1. "Hmm..What is Data-intensive Computing?"

The phrase was initially coined by National Science Foundation (NSF).

This particular definition sets a very nice context for our course.

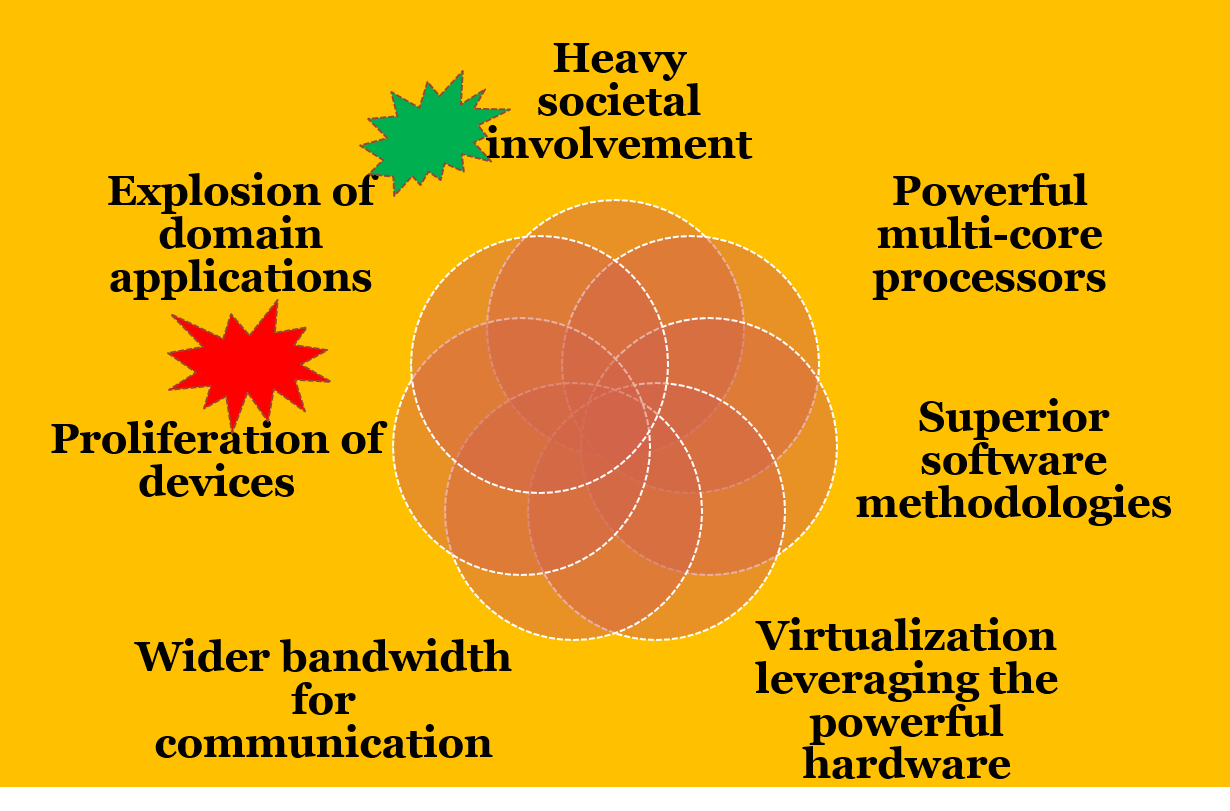

Before we go further let's set the context. We are living in a golden era in computing.

2. Given this context, how can you characterize data..big data?

Volume, velocity, variety, veracity (uncertainty) (Gartner, IBM) as illustarted here

3. How are we addressing the increased complexity in data?

Tremendous advances have taken place in statistical methods and tools, machine learning and data mining approaches, and internet based dissemination tools for analysis and visualization. Many tools are open source and freely available for anybody to use.More importantly, newer storage models, processing models, big data analytics and cloud infrastructures have emerged.

4. Okay. Can you give us some examples of data-intensive applications?

- Search engines

- Recommendation systems:

CineMatch of Netflix Inc. movie recommendations

Amazon.com: book/product recommendations - Biological systems: high throughput sequences (HTS)

Analysis: disease-gene match

Query/search for gene sequences - Space exploration

- Financial analysis

5. What about the scale of data?

Intelligence is a set of discoveries made by federating/processing information collected from diverse sources. Information is a cleansed form of raw data.

For statistically significant information we need reasonable amount of data.

For gathering good intelligence we need large amount of information.

As pointed out by Jim Grey in the Fourth Paradigm book enormous amount of data is generated by the millions of experiments and applications. Thus intelligence applications are invariably data-heavy, data-driven and data-intensive.

Lets discuss algorithm vs data. "More data beats better algorithms".

6. How about data applications? Characteristics of intelligent applications

Google search: How is different from regular search in existence before it? It took advantage of the fact the hyperlinks within web pages form an underlying structure that can be mined to determine the importance of various pages.

Restaurant and Menu suggestions: instead of “Where would you like to go?” “Would you like to go to CityGrille”? Learning capacity from previous data of habits, profiles, and other information gathered over time.

Collaborative and interconnected world inference capable: facebook friend suggestion Large scale data requiring indexing

…Do you know amazon is going to ship things before you order?

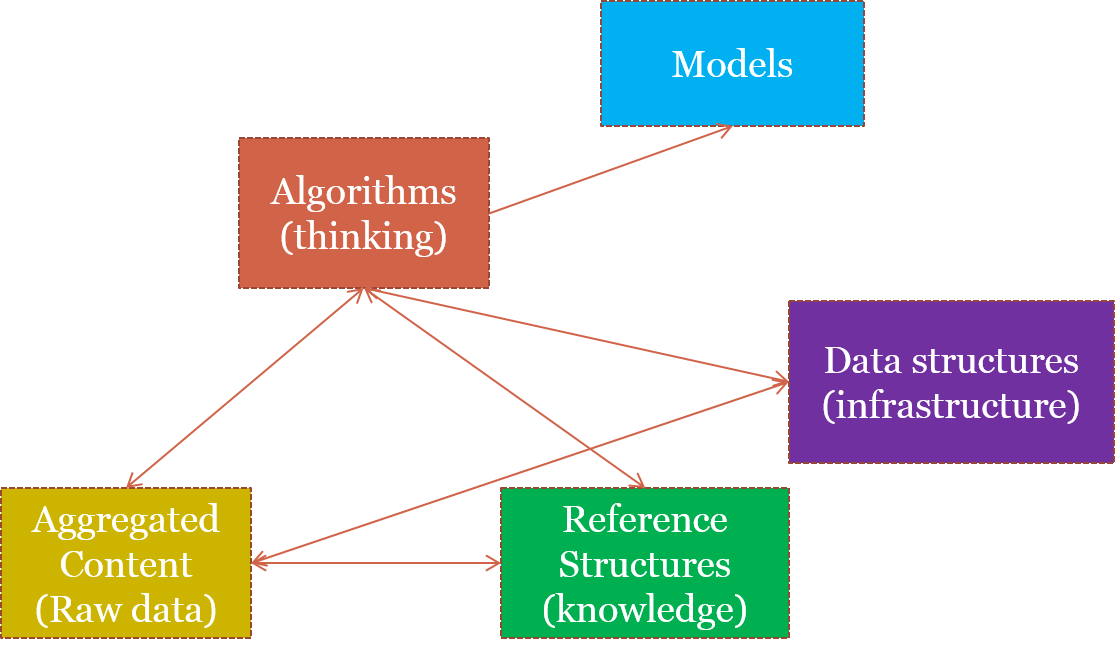

7. Data-intensive Computing Model (From Lin & Dyer)

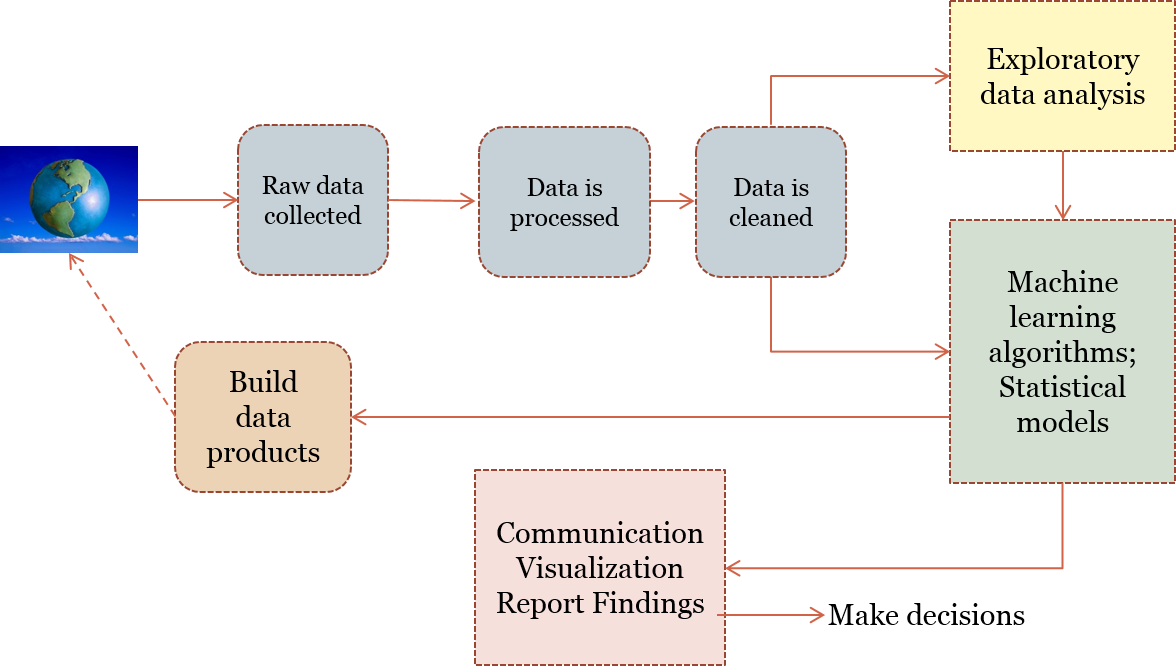

8. Data Science Process I(Adapted from Doing Data Science)

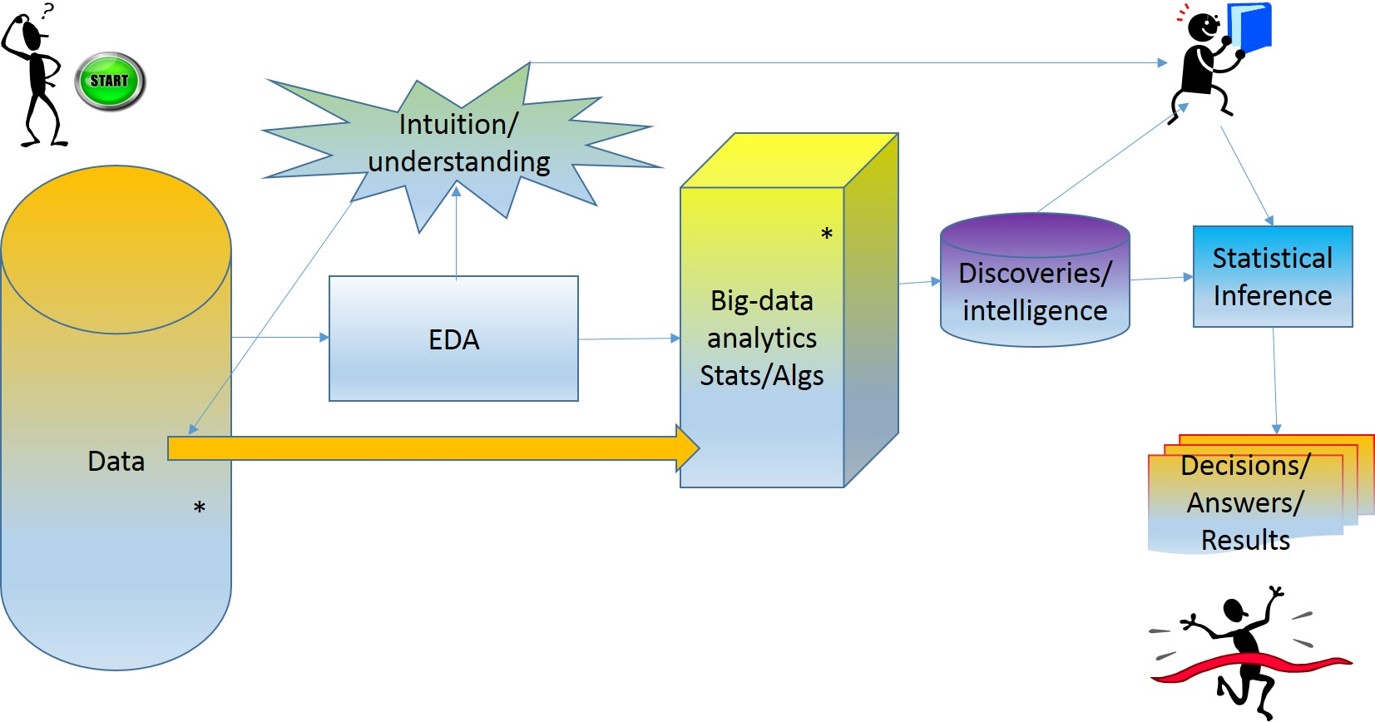

9. Data Science Process II

10. How do we plan to do it?

Small units of learning: conceptual and applied, reinforced by the lab assignment. In the recommeded text book, the author Field Cady organizes the it into three parts:

- The core: Skills that are absolutely indispensible at any level of data science.

- The additional core: Skills you need to know, but may not use it all the time.

- Stuff good to know: Expansion of the core topics and theoretical background.

Exploratory data analysis

Today we will explore our Lab1. We will also explore R language for EDA and a tool that supports R, the R Studio. Here is the R presentation and here are the lab1 details.

Data Cleaning (and Transformation)

Today's plan:

- Lets review Lab1 ques and answers

- Intorduction to RStudio and some of its features

- Data cleaning of real estate sales data

- Data for today's demo Data cleaning is not easy..

Please see recitaion and office hours schedule posted on Piazza

We are in Chapter 2 of Doing Data Science text. Today we will look at Data Cleaning and EDA for another real business: http://www.realdirect.com It is "Rethinking real estate": how it is bought/sold. This is a real estate business based on a subscription model. Traditional model involves a broker and a hefty commision for the broker selling the house (anywhere between 7-10%). RealDirect will provide.

- Broker support and interface and data-analytics intelligence

- Seller interface: sellers "subscribe" to realdirect (flat fee $365 month)

- Sellers get brokers at a lower rate (1%-2%)

- Buyers can use realdirect interface/platform to search, negotiate, and buy the available listing. (active, offer made, in contract, offer rejected etc.)

Cleaning Data: Basic steps

- Convert unknown file types to csv. For example, excel does read well into R, so convert it to csv and read it in as csv file.

- Remove characters such as $ and , embedded within numeric data

- Turn all (or select) variable names as well as values to lowercase for easy comparison and processing

- Remove outliers, zeros and N/A (blanks)

- Transform characters to numeric code

- Binning or categorizing data: add variable(s) that will "bin" or "categorize" orginal data. Example: age$Cat (-Inf,0, 10,30,50,inf)

- Add new information columns: "class" or "levels"

- Remove unwanted observations, and variables

- Add meaningful labels to support charting (this is more an EDA step)

- Turn character/quantitative data into factors

Algorithms for Data Processing I

Today's plan:

- Lets review Lab1 questions and answers: RShiny, web server, optional.

- Algorithms for data processing: we will explore some of these today

- Linear regression (linear model): a versatile algorithmic approach

- Synthesizing data using R methods; contrarian "lm" demo

Please see recitaion and office hours schedule posted on Piazza

Motivation 1 for "lm": Here is a list of Top ten machine learning algorithms.

Motivation 2 for "lm": Bloomberg's oil price prediction method.

We are in Chapter 3. Read Chapter 3. Three important data processing algorithms are discussed in this chapter. We will look into three of these today: Linear Regression. Problem 1: Consider the dataS = {(x,y)= (1,25), (10,250), (100,2500), (200,5000)}What next? What is value for X = 40? We observe that

- There is a linear pattern.

- Coefficient relating x and y is 25

- Seems deterministic

- and y = 25*x is the relationship, an obvious linear pattern.

Linear model is defined by the functional form:

y = β0 + β1*x

- A simple analysis of "cars" data already available in R environment Another demo to understand the evaluation metric for an "lm" . Goal is to visualize and understand lm and its statistics.

- The second demo is on synthetic data to understand the importance of the evalaution metrics and also to understand "overfitting"

- Cleaning and getting a data frame

- Plot the data points

- Visually assess the data plot

- Model using "lm"

- "lm" by default seeks to find a trend line that minimizes the sum of squares of the vertical distances between the approximated or predicted and observed y's.

- We want to learn both the "trend" and the "variance"

- Evaluate the measure of goodness of our model in R-squared and p: R-square measures the the proportion of variance. p-value assesses the significance of the result. We discuss both these measures: we want R-sqaured to high (0.0-1.0 range) and p to be low or <0.05. R-squared is 1-(total predicted error-squared/total mean error squared) (see p.67)

- Always evalaute the error and goodness of fit using standard methods: sum of sqaures is a operation in the evalaution process. .

See Demo code here

Algorithms for Data Processing II: Parameters

Today's plan:

- Evaluation of goodness of fit: with R Studio and LM

- Pick it up where we left off: K-means -- Hand trace an example

- K-means experiment stats: goodness of fit, number of iterations, convergence, termination criteria, closeness criteria

- K-nn: complete Iris demo, and discuss common mis-iterpretations

Please see recitaion and office hours schedule posted on Piazza

See here for sample questions Sample

See Demo code here

Data Infrastructures

Introduction

In the 1990's during the early days of the Internet, the access mode for home computing was through a humungous modem that made this characteristic noise. People had one of the two or three service providers: Prodigy, or Compuserve and probably the web and the search was the only application. Even that was slow. The "web page" was not fancy since most people had only line-based terminals for accessing the Internet. One of the popular browsers was called "Mosaic". When you query for the something it took such a long time that you can clean up your room by the time the query-results arrived.

In late 1990's Larry Page and Sergei Brin (Standford graduate) students worked on the idea considering the web as a large graph and ranking the nodes of this connected web. This became the core idea for their thesis and the company they founded, Google. Google that has since become the byword for "search". The core idea is an algorithm called "Pagerank". This is well explained in a paper [1] written about this thesis.

Until Google came about the focus was on improving the algorithms and the data infrastructure was secondary. There were questions about the storage use for supporting this mammoth network of pages and their content that are being searched. In 2004 two engineers from Google presented an overview paper [2] of the Google File System (GFS) and a novel parallel processing algorithm "MapReduce". A newer version of the paper is available at [3] .

Of course, Google did not release the code as open source. Doug Cutting an independent programmer who was living in Washington State reverse engineered the GFS and named it after the yellow toy elephant that belonged to his daughter. Yahoo hired him and made Hadoop open source.

In 2008 Yahoo, National Science Foundation and Computing Community Consortium gathered together educators, industries, and other important stakeholders for the inaugural Hadoop Summit. Look at the list of attendees here I learned Hadoop from Doug Cutting. It is imperative that we study Hadoop and MapReduce together to learn their collective contribution.

Let us understand the foundation of the Big Data Infrastructure that has become integral part of most computing environments. We will approach this study by processing unstructured data as discussed in text [4] .

References

- K. Bryan and T. Leise. The $25,000,000,000 Linear Algebra Behind Google. here.

- Dean, J. and Ghemawat, S. 2004. MapReduce: Simplified data processing on large clusters. In Proceedings of Operating Systems Design and Implementation (OSDI). San Francisco, CA. 137-150. MR, last viewed 2017.

- Newer version in ACM of the above paper here.

- J. Lin and C. Dyer. Data-Intensive Text Processing with MapReduce, Synthesis Lectures on Human Language Technologies, 2010, Vol. 3, No. 1, Pages 1-177, (doi: 10.2200/S00274ED1V01Y201006HLT007). Morgan & Claypool Publishers. An online version of this text is also available through UB Libraries since UB subscribes to Morgan and Claypool Publishers. Online version available here